How to Monitor LLM Flows in Laravel with LangSmith and OpenTelemetry

Observability is key to understanding the behavior of your AI-powered applications. In this guide, we’ll walk you through integrating a Laravel application with LangSmith using OpenTelemetry. This straightforward setup will demonstrate how to manually send traces to LangSmith, helping you monitor critical steps in your LLM (Large Language Model) flows.

While we’ll briefly introduce OpenTelemetry, this guide focuses on its implementation rather than its architecture. For a deeper dive, check out the official OpenTelemetry documentation.

Last month, the LangChain team announced OpenTelemetry support in LangSmith, which is a great opportunity to trace LLM flows in languages with limited LLM tooling, such as PHP.

Prerequisites

Before we begin, make sure you have the following:

- An OpenAI account with an API key

- A LangSmith account with an API key

- A Laravel development environment set up

Step 1: Initialize Laravel App

First, create a new Laravel project:

```bash
composer create-project laravel/laravel langsmith-tracing &&
cd langsmith-tracing
```

Next, configure the database connection in your .env file, then run the migrations:

```bash
php artisan migrate
```

Then, create a custom Artisan command to serve as the entry point for our integration:

```bash
php artisan make:command LangsmithLogCommand
```

Next, modify the generated command in app/Console/Commands/LangsmithLogCommand.php so it prints a simple message for verification:

```php
<?php

namespace App\Console\Commands;

use Illuminate\Console\Command;

class LangsmithLogCommand extends Command
{
    /**
     * The name and signature of the console command.
     *
     * @var string
     */
    protected $signature = 'langsmith:log';

    /**
     * The console command description.
     *
     * @var string
     */
    protected $description = 'Log trace data to LangSmith for monitoring and analysis';

    /**
     * Execute the console command.
     */
    public function handle()
    {
        // Print a line to the console to confirm the command is wired up.
        $this->info('Hello, traveler');
    }
}
```

Run the command:

```bash
php artisan langsmith:log
```

If everything is set up correctly, you should see: Hello, traveler.

Step 2: Configure OpenAI and Make an LLM Call

Next, install the OpenAI Laravel package:

```bash
composer require openai-php/laravel &&
php artisan openai:install
```

After installing, add your OpenAI credentials to the .env file:

```
OPENAI_API_KEY=<your_api_key_goes_here>
OPENAI_ORGANIZATION=<your_organization_id_here>
```

Now, update LangsmithLogCommand to send an LLM request using the GPT-3.5-turbo model:

```php
<?php

namespace App\Console\Commands;

use Exception;
use Illuminate\Console\Command;
use OpenAI\Laravel\Facades\OpenAI;

class LangsmithLogCommand extends Command
{
    /**
     * The name and signature of the console command.
     *
     * @var string
     */
    protected $signature = 'langsmith:log';

    /**
     * The console command description.
     *
     * @var string
     */
    protected $description = 'Log trace data to LangSmith for monitoring and analysis';

    /**
     * Execute the console command.
     */
    public function handle()
    {
        $model = 'gpt-3.5-turbo';
        $prompt = <<<EOP
        Your task is to provide the capitals of all six former Yugoslavian countries in the following JSON format:
        [
            {
                "country": "Country Name",
                "capital": "Capital Name"
            },
            ...
        ]

        Ensure the response strictly adheres to the JSON format and includes no extra text or explanation outside the JSON.
        EOP;

        // Send the prompt as a single system message to the Chat Completions API.
        $result = OpenAI::chat()->create([
            'model' => $model,
            'messages' => [
                [
                    'role' => 'system',
                    'content' => $prompt,
                ],
            ],
        ]);

        // Raw model output: a string that should contain only JSON.
        $outputString = $result['choices'][0]['message']['content'];
        $parsedArray = $this->parseOutputToArray($outputString);

        foreach ($parsedArray as $item) {
            $this->line("Country: {$item['country']}, Capital: {$item['capital']}");
        }
    }

    /**
     * Decode the model output and check that every item has a country and a capital.
     */
    protected function parseOutputToArray(string $output): array
    {
        try {
            $decodedOutput = json_decode($output, true);

            if (json_last_error() !== JSON_ERROR_NONE) {
                throw new Exception('Invalid JSON output: ' . json_last_error_msg());
            }

            // Valid JSON can still be a scalar or an object instead of a list.
            if (!is_array($decodedOutput) || !array_is_list($decodedOutput)) {
                throw new Exception('Output is not a JSON array.');
            }

            foreach ($decodedOutput as $index => &$item) {
                if (!is_array($item) || !isset($item['country'], $item['capital'])) {
                    throw new Exception('Output does not match the expected schema.');
                }

                // Give each item a 1-based id.
                $item['id'] = $index + 1;
            }
            // Break the reference left behind by the by-reference foreach.
            unset($item);

            return $decodedOutput;
        } catch (Exception $e) {
            throw new Exception('Error parsing output: ' . $e->getMessage());
        }
    }
}
```

In this step, we ask OpenAI for the list of countries and capitals in the specified JSON format, then parse the response into an array of associative arrays, one per country. The parser is deliberately specific to this example; in practice, you'd want a more generic parser.

Run the langsmith:log command again and you should see something like this:

```
Country: Slovenia, Capital: Ljubljana
Country: Croatia, Capital: Zagreb
Country: Bosnia and Herzegovina, Capital: Sarajevo
Country: Serbia, Capital: Belgrade
Country: Montenegro, Capital: Podgorica
Country: North Macedonia, Capital: Skopje
```

In this flow, we have two key steps:

- LLM Call: The model retrieves the list of countries and capitals in the specified format. It’s important to note that LLMs can be unpredictable, and we can't guarantee that the JSON format will always be returned correctly.

- Parser: The parser ensures that the output matches the expected format. While it’s generally better to have a generic parser that can handle various structures, in this case, we’ve used a hardcoded parser tailored to transform the JSON string into the array of objects required for this specific scenario.
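If you want the parser to be a little more generic, one option is to pass in the keys each item must have and to tolerate the Markdown code fence that chat models sometimes wrap around JSON. The function below is a minimal sketch under those assumptions: `parseJsonList` is a hypothetical name (not part of Laravel or the OpenAI package), and `array_is_list` requires PHP 8.1 or later.

```php
<?php

// Hypothetical helper (not part of Laravel or openai-php): decode an LLM reply that
// should be a JSON list of objects and check that each object has $requiredKeys.
function parseJsonList(string $output, array $requiredKeys): array
{
    $text = trim($output);

    // Strip a surrounding Markdown code fence (optionally tagged "json") if present.
    if (preg_match('/^`{3}(?:json)?\s*(.*?)\s*`{3}$/s', $text, $matches) === 1) {
        $text = $matches[1];
    }

    // Malformed JSON raises a JsonException instead of returning null.
    $decoded = json_decode($text, true, 512, JSON_THROW_ON_ERROR);

    // Valid JSON can still be a scalar or an object rather than a list.
    if (!is_array($decoded) || !array_is_list($decoded)) {
        throw new UnexpectedValueException('Expected a JSON array of objects.');
    }

    foreach ($decoded as $index => $item) {
        if (!is_array($item)) {
            throw new UnexpectedValueException("Item {$index} is not an object.");
        }

        // Report every required key that this item lacks.
        $missing = array_diff($requiredKeys, array_keys($item));
        if ($missing !== []) {
            throw new UnexpectedValueException(
                "Item {$index} is missing: " . implode(', ', $missing)
            );
        }
    }

    return $decoded;
}
```

Inside the command, `parseJsonList($outputString, ['country', 'capital'])` could stand in for `parseOutputToArray`; note that this sketch does not add the `id` field.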

Being able to observe these steps matters. When the model returns malformed JSON or the parser rejects its output, we want to see exactly which step failed and what it received. To get that visibility, we'll send each key step to LangSmith as part of a trace.
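Before touching the OpenTelemetry SDK, it can help to pin down which fields of the LLM step you want to record. The sketch below flattens the prompt and an OpenAI chat response (as a plain array) into the same attribute names the Step 5 command sets on its `OpenAI` span. `llmSpanAttributes` is a hypothetical helper, and the attribute names simply follow this guide's convention; check LangSmith's OpenTelemetry documentation for the attributes it actually maps.

```php
<?php

// Hypothetical helper: map one system prompt plus a chat-completion response
// (as a plain array) to the span attributes used for the "OpenAI" span in this guide.
// Missing fields fall back to '' or 0 so a partial response never breaks tracing.
function llmSpanAttributes(string $model, string $systemPrompt, array $response): array
{
    $message = $response['choices'][0]['message'] ?? [];
    $usage = $response['usage'] ?? [];

    return [
        'langsmith.span.kind' => 'llm',
        'gen_ai.system' => 'OpenAI',
        'gen_ai.request.model' => $model,
        'llm.request.type' => 'chat',
        'gen_ai.prompt.0.role' => 'system',
        'gen_ai.prompt.0.content' => $systemPrompt,
        'gen_ai.completion.0.role' => $message['role'] ?? '',
        'gen_ai.completion.0.content' => $message['content'] ?? '',
        'gen_ai.usage.prompt_tokens' => $usage['prompt_tokens'] ?? 0,
        'gen_ai.usage.completion_tokens' => $usage['completion_tokens'] ?? 0,
        'gen_ai.usage.total_tokens' => $usage['total_tokens'] ?? 0,
    ];
}
```

With the openai-php client you could pass `$result->toArray()` as the third argument and apply the whole map with `$span->setAttributes(...)` once the span exists.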

Step 3: Configure OpenTelemetry and LangSmith

Now, let’s set up OpenTelemetry in your Laravel project by installing the required packages:

```bash
composer require open-telemetry/api open-telemetry/exporter-otlp open-telemetry/sdk php-http/guzzle7-adapter
```

Next, configure the LangSmith integration by adding this entry to the array returned by config/services.php:

```php
'langsmith' => [
    'url' => env('LANGSMITH_OTEL_URL'),
    'api_key' => env('LANGSMITH_API_KEY'),
    'project' => env('LANGSMITH_PROJECT'),
],
```

Add the following environment variables to your .env file:

```
LANGSMITH_OTEL_URL=https://api.smith.langchain.com/otel
LANGSMITH_API_KEY=<your_api_key_goes_here>
LANGSMITH_PROJECT=default
```
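Because `env()` returns `null` for unset variables, a missing value is easy to overlook until export time. The sketch below, a hypothetical helper named `langsmithExporterSettings`, builds the traces endpoint and headers from the three settings and fails fast when the URL or API key is missing. It assumes the project header is spelled `Langsmith-Project`, as in LangSmith's OpenTelemetry documentation; verify that against the current docs.

```php
<?php

// Hypothetical helper: turn the three LangSmith settings from config/services.php
// into the OTLP/HTTP traces endpoint and the request headers.
function langsmithExporterSettings(?string $baseUrl, ?string $apiKey, ?string $project): array
{
    // Fail fast instead of exporting to a bad URL or without credentials.
    if ($baseUrl === null || $baseUrl === '') {
        throw new InvalidArgumentException('LANGSMITH_OTEL_URL is not set.');
    }
    if ($apiKey === null || $apiKey === '') {
        throw new InvalidArgumentException('LANGSMITH_API_KEY is not set.');
    }

    $headers = ['x-api-key' => $apiKey];

    // Assumption: header name as spelled in LangSmith's OpenTelemetry docs.
    if ($project !== null && $project !== '') {
        $headers['Langsmith-Project'] = $project;
    }

    return [
        // rtrim avoids ".../otel//v1/traces" when the base URL ends with a slash.
        'endpoint' => rtrim($baseUrl, '/') . '/v1/traces',
        'headers' => $headers,
    ];
}
```

You would call it with the three `config('services.langsmith.*')` values and pass `$settings['endpoint']` and `$settings['headers']` to `OtlpHttpTransportFactory::create()`.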

Step 4: Adding Traces to LangSmith

To start sending traces, we need an OTLP HTTP transport that points at LangSmith and an OTLP span exporter that uses it. Here's how you can set up the tracing pipeline:

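```php
use OpenTelemetry\Contrib\Otlp\OtlpHttpTransportFactory;
use OpenTelemetry\Contrib\Otlp\SpanExporter;
use OpenTelemetry\SDK\Trace\SpanProcessor\SimpleSpanProcessor;
use OpenTelemetry\SDK\Trace\TracerProvider;

// OTLP over HTTP with protobuf encoding, authenticated with the LangSmith API key.
$transport = (new OtlpHttpTransportFactory())->create(
    config('services.langsmith.url') . '/v1/traces',
    'application/x-protobuf',
    [
        'Content-Type' => 'application/x-protobuf',
        'x-api-key' => config('services.langsmith.api_key'),
        // LangSmith reads the target project from this header.
        'Langsmith-Project' => config('services.langsmith.project'),
    ]
);

$exporter = new SpanExporter($transport);

// SimpleSpanProcessor exports each span as soon as it ends.
$tracerProvider = new TracerProvider(new SimpleSpanProcessor($exporter));
$tracer = $tracerProvider->getTracer('app');

// A single root span, recorded by LangSmith as a "chain" run.
$rootSpan = $tracer->spanBuilder('YugoCitiesFlow')->startSpan();
$rootSpan->setAttribute('langsmith.span.kind', 'chain');

$rootSpan->end();
// Flush pending spans and shut down the exporter before the process exits.
$tracerProvider->shutdown();
```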

Usually, for scalability, you would use the OpenTelemetry Collector to manage and process trace data efficiently. However, for this demonstration, we’ll send traces directly to LangSmith as the observability backend. If you're interested in learning more about the OpenTelemetry Collector and how it can enhance your observability setup, check out the official documentation here: OpenTelemetry Collector.

If you add this code (including the `use` statements) to the handle() method of LangsmithLogCommand and run the command, the trace should appear in LangSmith under the project you specified.

Step 5: Adding Nested Runs

When it comes to tracing, LangSmith provides three different primitive data types:

- Project - A Project is a collection of traces. You can think of a project as a container for all the traces that are related to a single application or service. You can have multiple projects, and each project can have multiple traces.

- Trace - A Trace is a collection of runs that are related to a single operation. For example, if you have a user request that triggers a chain, and that chain makes a call to an LLM, then to an output parser, and so on, all of these runs would be part of the same trace. In OpenTelemetry, you can think of a LangSmith trace as a collection of spans. Runs are bound to a trace by a unique trace ID.

- Run - A Run is a span representing a single unit of work or operation within your LLM application. This could be anything from a single call to an LLM or chain, to a prompt formatting call, to a runnable lambda invocation. In OpenTelemetry, you can think of a run as a span.
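To make the hierarchy concrete, the sketch below groups simplified span records into per-trace run trees: spans that share a trace ID belong to one trace, and each span's parent ID nests it under another run. The record shape (`trace_id`, `span_id`, `parent_id`, `name`) and both function names are hypothetical simplifications for illustration, not LangSmith's internal format.

```php
<?php

// Hypothetical illustration of LangSmith's data model using simplified span records:
// ['trace_id' => ..., 'span_id' => ..., 'parent_id' => ?string, 'name' => ...].
// Spans sharing a trace_id form one trace; parent_id nests runs inside each other.
function buildRunTrees(array $spans): array
{
    // Group spans by trace ID: one group per trace.
    $byTrace = [];
    foreach ($spans as $span) {
        $byTrace[$span['trace_id']][] = $span;
    }

    $trees = [];
    foreach ($byTrace as $traceId => $traceSpans) {
        // Index spans by parent ID; root runs (no parent) are stored under ''.
        $children = [];
        foreach ($traceSpans as $span) {
            $children[$span['parent_id'] ?? ''][] = $span;
        }
        $trees[$traceId] = buildRunNodes($children, '');
    }

    return $trees;
}

// Recursively turn the spans whose parent is $parentId into nested run nodes.
function buildRunNodes(array $children, string $parentId): array
{
    $nodes = [];
    foreach ($children[$parentId] ?? [] as $span) {
        $nodes[] = [
            'name' => $span['name'],
            'children' => buildRunNodes($children, (string) $span['span_id']),
        ];
    }

    return $nodes;
}
```

Fed the spans from the command below, this would yield one trace whose root run `YugoCitiesFlow` has `OpenAI` and `OutputParser` as children, which is the nesting you should see in LangSmith.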

We’ll create two new spans as children of the root span, representing distinct parts of our LLM flow (the LLM call and the output parsing). These will be tracked as separate runs inside the trace.

Here’s the complete updated command with tracing:

```php
<?php

namespace App\Console\Commands;

use Exception;
use Illuminate\Console\Command;
use OpenAI\Laravel\Facades\OpenAI;
use OpenTelemetry\API\Trace\StatusCode;
use OpenTelemetry\Contrib\Otlp\OtlpHttpTransportFactory;
use OpenTelemetry\Contrib\Otlp\SpanExporter;
use OpenTelemetry\SDK\Trace\SpanProcessor\SimpleSpanProcessor;
use OpenTelemetry\SDK\Trace\TracerProvider;
use Throwable;

class LangsmithLogCommand extends Command
{
    /**
     * The name and signature of the console command.
     *
     * @var string
     */
    protected $signature = 'langsmith:log';

    /**
     * The console command description.
     *
     * @var string
     */
    protected $description = 'Log trace data to LangSmith for monitoring and analysis';

    /**
     * Execute the console command.
     */
    public function handle()
    {
        $transport = (new OtlpHttpTransportFactory())->create(
            config('services.langsmith.url') . '/v1/traces',
            'application/x-protobuf',
            [
                'Content-Type' => 'application/x-protobuf',
                'x-api-key' => config('services.langsmith.api_key'),
                'Langsmith-Project' => config('services.langsmith.project'),
            ]
        );

        $exporter = new SpanExporter($transport);

        // SimpleSpanProcessor exports each span as soon as it ends.
        $tracerProvider = new TracerProvider(new SimpleSpanProcessor($exporter));
        $tracer = $tracerProvider->getTracer('app');

        // Root span: the whole flow, recorded by LangSmith as a "chain" run.
        $rootSpan = $tracer->spanBuilder('YugoCitiesFlow')->startSpan();
        $rootSpan->setAttribute('langsmith.span.kind', 'chain');
        // While this scope is active, new spans become children of the root span.
        $rootScope = $rootSpan->activate();

        try {
            $model = 'gpt-3.5-turbo';
            $prompt = <<<EOP
            Your task is to provide the capitals of all six former Yugoslavian countries in the following JSON format:
            [
                {
                    "country": "Country Name",
                    "capital": "Capital Name"
                },
                ...
            ]

            Ensure the response strictly adheres to the JSON format and includes no extra text or explanation outside the JSON.
            EOP;

            // Child run 1: the LLM call.
            $openAISpan = $tracer->spanBuilder('OpenAI')->startSpan();
            $openAISpan->setAttribute('langsmith.span.kind', 'llm');
            $openAISpan->setAttribute('gen_ai.system', 'OpenAI');
            $openAISpan->setAttribute('gen_ai.request.model', $model);
            $openAISpan->setAttribute('llm.request.type', 'chat');
            $openAISpan->setAttribute('gen_ai.prompt.0.content', $prompt);
            $openAISpan->setAttribute('gen_ai.prompt.0.role', 'system');

            try {
                $result = OpenAI::chat()->create([
                    'model' => $model,
                    'messages' => [
                        [
                            'role' => 'system',
                            'content' => $prompt,
                        ],
                    ],
                ]);

                $outputString = $result['choices'][0]['message']['content'] ?? '';

                $openAISpan->setAttribute('gen_ai.completion.0.content', $outputString);
                $openAISpan->setAttribute('gen_ai.completion.0.role', $result['choices'][0]['message']['role'] ?? '');
                $openAISpan->setAttribute('gen_ai.usage.prompt_tokens', $result['usage']['prompt_tokens'] ?? 0);
                $openAISpan->setAttribute('gen_ai.usage.completion_tokens', $result['usage']['completion_tokens'] ?? 0);
                $openAISpan->setAttribute('gen_ai.usage.total_tokens', $result['usage']['total_tokens'] ?? 0);
            } catch (Throwable $e) {
                $openAISpan->recordException($e);
                $openAISpan->setStatus(StatusCode::STATUS_ERROR, $e->getMessage());
                throw $e;
            } finally {
                // End here so the span's duration covers only the API call.
                $openAISpan->end();
            }

            // Child run 2: output parsing. The span starts before parsing so it is timed.
            $parserSpan = $tracer->spanBuilder('OutputParser')->startSpan();
            $parserSpan->setAttribute('langsmith.span.kind', 'parser');
            $parserSpan->setAttribute('gen_ai.prompt.0.content', $outputString);
            $parserSpan->setAttribute('gen_ai.prompt.0.role', 'assistant');

            try {
                $parsedArray = $this->parseOutputToArray($outputString);

                $parserSpan->setAttribute('gen_ai.completion.0.content', json_encode($parsedArray));
                $parserSpan->setAttribute('gen_ai.completion.0.role', 'output');
            } catch (Throwable $e) {
                $parserSpan->recordException($e);
                $parserSpan->setStatus(StatusCode::STATUS_ERROR, $e->getMessage());
                throw $e;
            } finally {
                $parserSpan->end();
            }

            // Summarize the whole flow on the root span (token usage comes from $result).
            $rootSpan->setAttribute('gen_ai.usage.prompt_tokens', $result['usage']['prompt_tokens'] ?? 0);
            $rootSpan->setAttribute('gen_ai.usage.completion_tokens', $result['usage']['completion_tokens'] ?? 0);
            $rootSpan->setAttribute('gen_ai.usage.total_tokens', $result['usage']['total_tokens'] ?? 0);
            $rootSpan->setAttribute('gen_ai.completion.0.content', json_encode($parsedArray));
            $rootSpan->setAttribute('gen_ai.completion.0.role', 'output');
        } catch (Throwable $e) {
            // Mark the whole trace as failed so the error is visible in LangSmith.
            $rootSpan->recordException($e);
            $rootSpan->setStatus(StatusCode::STATUS_ERROR, $e->getMessage());
            throw $e;
        } finally {
            // End the root span, detach its scope, then flush and shut down the exporter.
            $rootSpan->end();
            $rootScope->detach();
            $tracerProvider->shutdown();
        }
    }

    /**
     * Decode the model output and check that every item has a country and a capital.
     */
    protected function parseOutputToArray(string $output): array
    {
        try {
            $decodedOutput = json_decode($output, true);

            if (json_last_error() !== JSON_ERROR_NONE) {
                throw new Exception('Invalid JSON output: ' . json_last_error_msg());
            }

            // Valid JSON can still be a scalar or an object instead of a list.
            if (!is_array($decodedOutput) || !array_is_list($decodedOutput)) {
                throw new Exception('Output is not a JSON array.');
            }

            foreach ($decodedOutput as $index => &$item) {
                if (!is_array($item) || !isset($item['country'], $item['capital'])) {
                    throw new Exception('Output does not match the expected schema.');
                }

                // Give each item a 1-based id.
                $item['id'] = $index + 1;
            }
            // Break the reference left behind by the by-reference foreach.
            unset($item);

            return $decodedOutput;
        } catch (Exception $e) {
            throw new Exception('Error parsing output: ' . $e->getMessage());
        }
    }
}
```

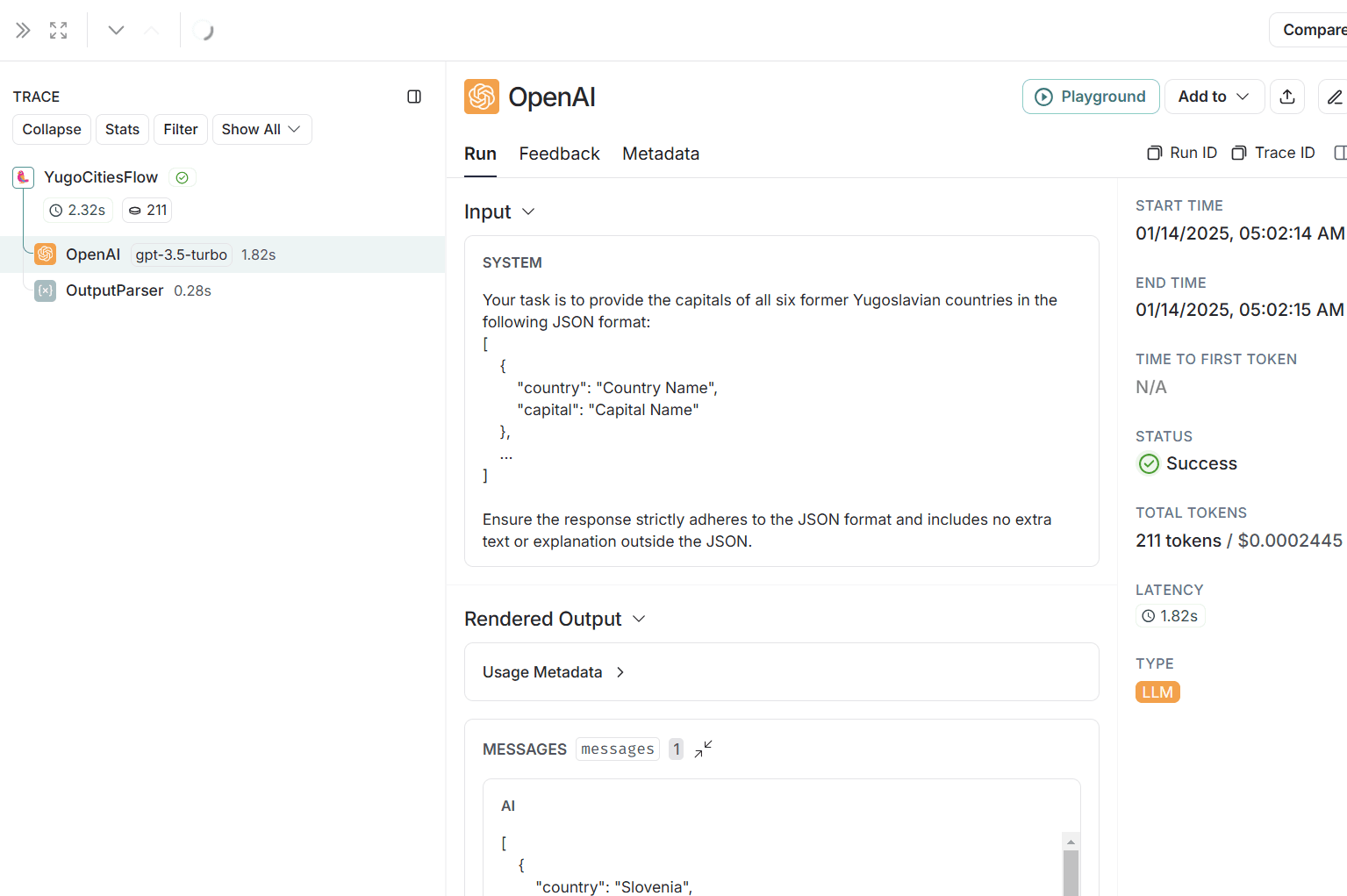

This code defines three key spans to trace the workflow. The YugoCitiesFlow span is the root span, representing the overall process of generating and parsing AI responses. The OpenAI span captures the interaction with the OpenAI API, with attributes like the model used, prompt content, response content, token usage, and completion metadata. The OutputParser span focuses on validating and parsing the API response, with attributes capturing the input JSON and the parsed output. These spans and their attributes provide detailed observability into the flow, linking each step and its context for monitoring and debugging.

On the LangSmith side, you'll see something like this:

Conclusion

By integrating LangSmith and OpenTelemetry into your Laravel application, you can trace the flow of LLM calls and outputs, making it easier to monitor, debug, and optimize your AI-powered workflows. This setup ensures that each step of your process is observable, providing insights into performance, errors, and inefficiencies.
